Basics of the industry

A data center is a specialized facility designed to house a large number of computer servers, networking equipment, and storage systems used to manage, process, store, and disseminate data.

Why is Data Center a buzzword?

Demand for data centers (DCs) in India is expected to grow due to the country’s rapid digital transformation, driven by cloud adoption, 5G deployment, and data localization laws.

- Current DC Capacity in India = 1 GW

- Expected DC Capacity by Dec 2026 = 2 GW

Capex Requirement = Rs 50 crore per MW, so adding ~1 GW (1,000 MW) implies a Total Capex of ~Rs 50,000 crore by Dec 2026.
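The Rs 50,000 crore figure is the capacity to be added multiplied by the per-MW capex. A minimal sketch of that arithmetic, assuming capex scales linearly with MW (real projects vary with tier, land and power costs); the function name is hypothetical:

```python
# Figures from the notes above; treat them as rough industry estimates.
CURRENT_CAPACITY_MW = 1_000   # ~1 GW today
TARGET_CAPACITY_MW = 2_000    # ~2 GW expected by Dec 2026
CAPEX_RS_CR_PER_MW = 50       # ~Rs 50 crore per MW


def incremental_capex_cr(current_mw: float, target_mw: float, capex_per_mw_cr: float) -> float:
    """Capex (Rs crore) needed to grow capacity from current_mw to target_mw."""
    added_mw = target_mw - current_mw
    if added_mw < 0:
        raise ValueError("target capacity must not be below current capacity")
    return added_mw * capex_per_mw_cr


total = incremental_capex_cr(CURRENT_CAPACITY_MW, TARGET_CAPACITY_MW, CAPEX_RS_CR_PER_MW)
print(f"Incremental capex: Rs {total:,.0f} crore")
```

Changing any one input (for example, a higher per-MW cost for AI-ready, high-density halls) scales the total proportionally.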

Types of DC based on structure and requirement:

| Parameter | Enterprise DC | Colocation DC | Cloud DC | Edge DC |
|---|---|---|---|---|
| Ownership | Owned and operated by a single organization. | A third party owns the facility; organizations rent space. | Owned and managed by cloud service providers (AWS, Azure). | Owned by organizations or third parties, located closer to end-users. |
| Primary Usage | Used for internal business operations and private data. | Multiple organizations rent rack space for their equipment. | Provides on-demand IT resources and services over the internet. | Supports applications requiring low latency (IoT, real-time data). |
| Infrastructure | Customizable to specific enterprise needs. | Shared physical infrastructure; organizations bring their own hardware. | Virtual infrastructure (compute, storage, and networking provided as services). | Smaller, distributed facilities that process data locally. |
| Scalability | Limited by physical space and capital investment. | Can scale by renting more space but may face physical limits. | Highly scalable with resources available on demand. | Scalable but typically designed for specific local needs. |
| Cost | High capital and operational expenses. | Lower than building a data center but includes rental costs. | Pay-as-you-go pricing based on resource usage. | Moderate cost, depending on size and number of sites. |
| Maintenance & Management | Managed by the enterprise’s IT staff. | Managed by the colocation provider; organizations maintain their own equipment. | Fully managed by the cloud provider, reducing in-house effort. | Limited local management, often designed for remote monitoring. |
| Redundancy | Varies by organization; usually includes backup power and disaster recovery plans. | High redundancy provided by the colocation provider. | Extremely high redundancy, often across multiple regions. | Typically less redundant than cloud or enterprise centers, but can integrate with larger data networks. |
| Data Security | Managed by the enterprise, with customizable security layers. | Shared responsibility: the provider handles physical security; users secure their own data. | Cloud providers offer built-in security features, but users are responsible for data encryption and access control. | Typically secured locally but can connect to secure cloud environments for redundancy. |
| Energy Efficiency | Depends on the enterprise’s design and investment. | Energy efficiency optimized by the colocation provider. | Highly optimized by cloud providers for maximum efficiency. | Generally lower energy consumption due to smaller scale, but efficiency depends on design. |
| Geographic Flexibility | Limited by the location of the enterprise’s physical assets. | Colocation facilities are available in many regions worldwide. | Highly flexible, with resources available in multiple regions globally. | Located close to end-users to reduce latency, but not as geographically widespread. |
| Examples | Banks, large enterprises, and government organizations. | Small-to-medium businesses needing data center capabilities. | Tech companies, SaaS providers, global businesses (e.g., Netflix, Spotify). | IoT systems, autonomous vehicles, smart cities. |

Different Tiers of DC:

| Tier | Redundancy | Cooling | Power Requirement | Expected Uptime |
|---|---|---|---|---|
| Tier I | No redundancy (N) | Basic cooling systems | Single power path (no redundancy) | 99.671% (28.8 hours downtime/year) |
| Tier II | Partial redundancy (N+1) | Redundant cooling with backup units | Single power path with backup (N+1) | 99.741% (22.7 hours downtime/year) |
| Tier III | Concurrently maintainable (N+1) | Advanced redundant cooling | Dual power paths (but one active) | 99.982% (1.6 hours downtime/year) |
| Tier IV | Fault-tolerant (2N+1) | Fully redundant cooling systems | Dual active power paths (2N+1) | 99.995% (26.3 minutes downtime/year) |
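The downtime figures in the tier table follow directly from the uptime percentage: downtime = (1 − uptime) × 8,760 hours per year. A small sketch to check them, assuming a 365-day year:

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours; ignores leap years

# Availability figures commonly cited for each tier
TIER_UPTIME_PCT = {
    "Tier I": 99.671,
    "Tier II": 99.741,
    "Tier III": 99.982,
    "Tier IV": 99.995,
}


def annual_downtime_hours(uptime_pct: float) -> float:
    """Convert an availability percentage into expected downtime hours per year."""
    return (1 - uptime_pct / 100) * HOURS_PER_YEAR


for tier, pct in TIER_UPTIME_PCT.items():
    hours = annual_downtime_hours(pct)
    print(f"{tier}: {pct}% uptime -> {hours:.1f} h ({hours * 60:.0f} min) downtime/year")
```

Each step up in tier cuts expected downtime sharply, which is why Tier III/IV facilities command higher capex and rentals.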

Usage of Data Center:

Key Components of DC:

| Category | % of total capex | Meaning |
|---|---|---|
| Servers | 62% | Servers are the largest single product category of data center capex. The median server costs around $7,000, but higher-end servers can cost over $100,000 each. Original design manufacturers (ODMs) design and manufacture servers that are sold under another company’s brand or directly to large buyers such as hyperscalers. Hyperscalers also design their own chips: for example, Google’s Tensor Processing Units (TPUs) are custom semiconductors that go into servers in Google Cloud data centers, and Amazon Web Services has its own custom semiconductors (Graviton) and servers. Original equipment manufacturers (OEMs) sell servers under their own brand. The largest OEM is Dell Technologies, with an estimated 12% market share of data center servers (by revenue). |
| Networking | 9% | Networking covers several different pieces of equipment. Switches handle communication within the data center or local area network; typically, each rack has its own networking switch. Routers handle traffic between buildings and networks, typically using internet protocol (IP). Some cloud service providers use “white box” networking switches (i.e., manufactured by third parties to the provider’s specifications). AI workloads are bandwidth-intensive, connecting hundreds of processors with high-throughput links. As AI models grow, the number of GPUs required to process them grows too, so bigger networks are needed to interconnect the GPUs. Cisco is the market leader. |
| Storage | 3% | |
| Construction & installation | 12% | Construction firms oversee all aspects of the construction project, including project management, specialty contractors, material purchasing, and equipment rental. These firms report lower operating margins, given the large amount of pass-through costs. The average operating margin is approximately 4%. |
| Electrical | 7% | |
| Thermal | 3% | |
| Generator | 2% | |
| Engineering | 1% | Engineers plan the electrical, mechanical, cooling, fire protection, and physical security systems of the data center. Importantly, they must understand the IT infrastructure (e.g., network, routing, storage), which has implications for the physical infrastructure requirements. |
| Total | 100% | Listed shares sum to 99%, presumably due to rounding. |
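To see what the capex shares mean in absolute terms, a budget can be split pro rata across the categories. A minimal sketch with a hypothetical budget of 1,000 (any currency unit); since the listed shares add up to 99%, the code normalises by their sum so the whole budget is allocated:

```python
# Shares of total data center capex from the components table (percent).
CAPEX_SHARE_PCT = {
    "Servers": 62, "Networking": 9, "Storage": 3,
    "Construction & installation": 12, "Electrical": 7,
    "Thermal": 3, "Generator": 2, "Engineering": 1,
}


def allocate(budget: float, shares_pct: dict[str, float]) -> dict[str, float]:
    """Split a budget across categories in proportion to their shares.

    Shares are normalised by their own sum, so rounded shares that do not
    add up to exactly 100% still allocate the full budget.
    """
    total_pct = sum(shares_pct.values())
    return {name: budget * pct / total_pct for name, pct in shares_pct.items()}


# Hypothetical budget, for illustration only.
for name, amount in allocate(1_000, CAPEX_SHARE_PCT).items():
    print(f"{name:<28} {amount:8.1f}")
```

The split may differ by market and facility type (e.g., AI-heavy builds skew further toward servers), so treat it as indicative.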

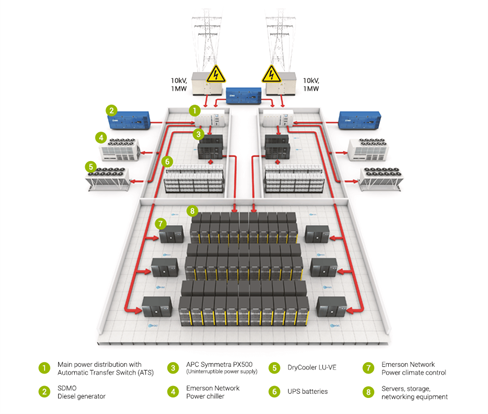

DC Visualization

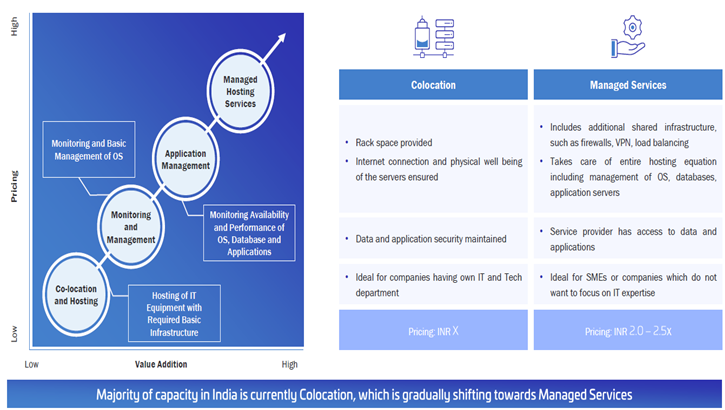

Types of Business Models for Building DCs:

- Build and Lease – A company or developer builds a data center and leases the space to tenants (other companies) for their data and server needs. Anant Raj, Nxtra and Yotta are key players.

- Build and Sell – A developer builds a data center and then sells it to a third party (such as an enterprise, cloud provider, or investment firm). Techno Electric is a key player.

Studying Techno Electric and Anant Raj in more detail helps clarify how the two business models differ.

Basics of Data Center-

1. Compute: Compute refers to the processing power and memory required to run applications on servers. Depending on the type of workload, the server will use different types of chips, typically CPUs or GPUs. CPUs are the central processors of computers; they are good at handling complex operations and act as the main interface with software. GPUs excel in parallel processing, completing many simple operations at once. This is why they work so well for their original purpose in graphics, and why they’re well-designed for AI workloads which are made up of many small calculations.

The CPU market is dominated by Intel and AMD; the GPU market is dominated by Nvidia.

2. Networking: Networking enables the flow of data between servers, storage, and applications.

There are three basic network components:

- Switches connect servers, storage, and other networking equipment; they provide the data flow between these devices. Switches facilitate communication within the same network.

- Routers connect different networks and sub-networks. As data flows in and out of the data center, routers process the flow of data so it goes to the right place. Routers provide the connection to other networks.

- Fiber-optic and copper cables – These are the physical cables connecting the routers, switches, and data centers to the rest of the world.
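Routers decide where data goes by looking up each packet’s destination address in a routing table and choosing the most specific matching route (longest-prefix match). A simplified sketch using Python’s standard `ipaddress` module; the routing table, prefixes and next-hop names are hypothetical, and real routers perform this lookup in specialised hardware:

```python
import ipaddress

# Hypothetical routing table: destination prefix -> next hop / outgoing link.
ROUTES = {
    "0.0.0.0/0": "internet-uplink",   # default route
    "10.0.0.0/8": "dc-core",
    "10.1.0.0/16": "pod-1",
    "10.1.2.0/24": "rack-12-leaf",
}


def next_hop(dst: str, routes: dict[str, str]) -> str:
    """Return the next hop for dst using longest-prefix match."""
    addr = ipaddress.ip_address(dst)
    best_net, best_hop = None, None
    for prefix, hop in routes.items():
        net = ipaddress.ip_network(prefix)
        # Keep the matching route with the longest (most specific) prefix.
        if addr in net and (best_net is None or net.prefixlen > best_net.prefixlen):
            best_net, best_hop = net, hop
    if best_hop is None:
        raise LookupError(f"no route to {dst}")
    return best_hop


print(next_hop("10.1.2.7", ROUTES))  # rack-12-leaf
print(next_hop("10.9.0.1", ROUTES))  # dc-core
print(next_hop("8.8.8.8", ROUTES))   # internet-uplink
```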

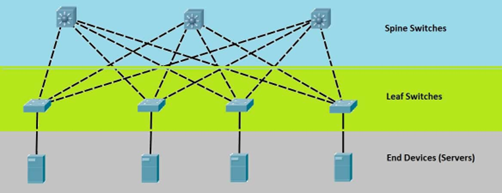

The popular model for data center networking is the spine-leaf model:

Each rack has switches at the top of the rack (leaf switches). Each leaf switch then connects to several larger spine switches that form the backbone of the network. An important concept is that every leaf connects to every spine, so any two racks are only two hops apart, and if one spine switch goes down, traffic can be routed through the remaining spines without losing service.
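Because every leaf links to every spine, traffic between two leaves always goes leaf to spine to leaf, and there are as many parallel paths as there are spines. A toy model of that topology; the switch counts and names are hypothetical:

```python
from itertools import product


def spine_leaf(num_spines: int, num_leaves: int) -> set[tuple[str, str]]:
    """Build a spine-leaf fabric as (leaf, spine) links: every leaf links to every spine."""
    spines = [f"spine{i}" for i in range(num_spines)]
    leaves = [f"leaf{j}" for j in range(num_leaves)]
    return {(leaf, spine) for leaf, spine in product(leaves, spines)}


def leaf_to_leaf_paths(links: set[tuple[str, str]], src: str, dst: str,
                       failed: frozenset = frozenset()) -> list[str]:
    """Spines that can carry src -> spine -> dst traffic, excluding failed spines."""
    spines_src = {spine for (leaf, spine) in links if leaf == src}
    spines_dst = {spine for (leaf, spine) in links if leaf == dst}
    return sorted((spines_src & spines_dst) - failed)


links = spine_leaf(num_spines=4, num_leaves=8)
print(len(links))                                   # 32 links (4 spines x 8 leaves)
print(leaf_to_leaf_paths(links, "leaf0", "leaf5"))  # all four spines
print(leaf_to_leaf_paths(links, "leaf0", "leaf5", failed=frozenset({"spine2"})))
```

Losing one spine removes one of the parallel paths but leaves the others, so traffic keeps flowing at reduced capacity.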

Two Major Networking Technologies: InfiniBand vs Ethernet

- Ethernet is slower (mainly higher latency), cheaper, and far more widely adopted. It is the standard for wired networking almost everywhere, from office LANs to data centers.

- InfiniBand is faster, more expensive, and dominant in high-performance computing. It is used to interconnect servers, and servers with storage, at low latency, which matters for workloads that process huge amounts of data, like training LLMs.

Cisco, Arista and Nvidia (after its acquisition of Mellanox) are the leading players in networking equipment.
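Whether latency or bandwidth dominates depends on message size. A first-order sketch, transfer time = fixed latency + bits / bandwidth, comparing two hypothetical links with equal bandwidth but different latency (standing in for a lower-latency fabric and a higher-latency one; the numbers are illustrative, not vendor specifications):

```python
def transfer_time_us(message_bytes: int, latency_us: float, bandwidth_gbps: float) -> float:
    """First-order transfer time in microseconds: fixed latency plus serialization time.

    bandwidth_gbps is in gigabits per second.
    """
    bits = message_bytes * 8
    serialization_us = bits / (bandwidth_gbps * 1e9) * 1e6
    return latency_us + serialization_us


# Hypothetical link parameters, for illustration only.
LOW_LATENCY = {"latency_us": 1.0, "bandwidth_gbps": 400}
HIGH_LATENCY = {"latency_us": 5.0, "bandwidth_gbps": 400}

for size in (4 * 1024, 64 * 1024 * 1024):  # a 4 KiB message vs a 64 MiB transfer
    a = transfer_time_us(size, **LOW_LATENCY)
    b = transfer_time_us(size, **HIGH_LATENCY)
    print(f"{size:>10} B: low-latency {a:9.2f} us, high-latency {b:9.2f} us")
```

For small messages the fixed latency is most of the cost, while for large transfers bandwidth dominates; workloads that exchange many small messages between servers are therefore the most latency-sensitive.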

Networking Semiconductor – These are specialized chips designed to manage data transfer across network devices, ensuring efficient, high-speed, and secure communication. These semiconductors power networking equipment like routers, switches, and servers, optimizing network performance, latency, and bandwidth.

- NICs: A Network Interface Card is the semiconductor that communicates with the switches and passes the data on to the CPU for processing.

- SmartNIC: A SmartNIC takes it a step further and removes some of the processing workload from the CPU. The SmartNIC can then communicate directly with the GPU.

- Data Processing Units (DPUs): A DPU then takes it another step further, integrating more capabilities onto the chip. The goal with the DPU is to improve processing efficiency for AI workloads and remove the need for processing from the CPU.

Broadcom and Marvell are the two largest semiconductor providers in the networking space (outside of Nvidia).

3. Storage: Within data center storage, two primary options exist: flash and disk.

- Flash, represented by solid-state drives or SSDs, is the go-to choice for high-performance computing workloads demanding fast data access with high bandwidth and low latency.

- Disks (Hard Disk Drives – HDDs) offer higher capacity but come with lower bandwidth and higher latency. For long-term storage needs, disks remain the preferred technology.

There are a few storage architectures within the data center:

- Direct Attached Storage (DAS) – Storage is attached directly to the server, only that server can access the storage.

- Storage Area Network (SAN) – A dedicated network that gives multiple servers block-level access to pooled storage.

- Network Attached Storage (NAS) – File-level storage attached to the network and shared by multiple clients.

- Software Defined Storage (SDS) – A virtualization layer that pools physical storage and offers additional flexibility and scalability.
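The pooling idea behind SDS can be sketched as a software layer that presents several physical devices as one pool and carves virtual volumes out of it. A toy sketch with hypothetical class names and disk sizes; real SDS products also handle replication, failures and performance tiers, which this ignores:

```python
from dataclasses import dataclass, field


@dataclass
class Disk:
    name: str
    capacity_gb: int
    used_gb: int = 0

    @property
    def free_gb(self) -> int:
        return self.capacity_gb - self.used_gb


@dataclass
class StoragePool:
    """Toy software-defined storage layer: many physical disks, one logical pool."""
    disks: list[Disk] = field(default_factory=list)

    def free_gb(self) -> int:
        return sum(d.free_gb for d in self.disks)

    def create_volume(self, size_gb: int) -> dict[str, int]:
        """Carve a virtual volume out of the pool; returns GB placed on each disk."""
        if size_gb > self.free_gb():
            raise ValueError("not enough free capacity in the pool")
        placement, remaining = {}, size_gb
        # Take space from the disks with the most free capacity first.
        for disk in sorted(self.disks, key=lambda d: d.free_gb, reverse=True):
            take = min(disk.free_gb, remaining)
            if take:
                disk.used_gb += take
                placement[disk.name] = take
                remaining -= take
            if remaining == 0:
                break
        return placement


pool = StoragePool([Disk("disk0", 960), Disk("disk1", 960), Disk("disk2", 4000)])
print(pool.free_gb())            # 5920 GB pooled from three devices
print(pool.create_volume(4500))  # a single volume larger than any one disk
print(pool.free_gb())            # 1420
```

The application sees one 4.5 TB volume even though no single disk is that large, which is the flexibility SDS provides.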

Different Components in Value Chain-

a. Servers: Servers integrate the CPUs, GPUs, networking, memory, and cooling into one unit. Original design manufacturers (ODMs) design and manufacture products that other companies buy and rebrand. An OEM then buys that hardware and focuses on sales, marketing, and support for those products. “ODM direct” in this chart refers to ODMs selling directly to companies like the hyperscalers.

Indian listed companies that claim to build servers include Netweb Technologies and Esconet Technologies.

b. Racks and Fibre Optic Cables: Racks are metal frames that house servers, network equipment, and storage devices. Standard full-height racks are 42U tall (1U = 1.75 inches) and are typically paired with airflow or cooling mechanisms to prevent overheating. Key companies are Vertiv, Schneider Electric and Eaton.

Optical fibers are crucial for high-speed data transmission between servers, storage, and networking devices. These fibers support faster data transfer rates (up to hundreds of gigabits per second) and longer distances compared to copper cables, making them ideal for modern high-performance data centers. They reduce latency and power consumption, essential for scaling data center operations. Key companies are Sterlite Technologies and Tejas Networks.
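Rack capacity is counted in rack units (U). A quick sketch of the arithmetic; the server height and the space reserved for top-of-rack switches and other gear are hypothetical, and in practice per-rack power and cooling limits often cap density before physical space does:

```python
RACK_UNIT_INCHES = 1.75  # 1U
RACK_HEIGHT_U = 42       # standard full-height rack


def servers_per_rack(server_height_u: int, reserved_u: int = 0, rack_u: int = RACK_HEIGHT_U) -> int:
    """Servers of a given height that fit after reserving some U for switches, PDUs, etc."""
    usable_u = rack_u - reserved_u
    if server_height_u <= 0 or usable_u < 0:
        raise ValueError("invalid rack or server height")
    return usable_u // server_height_u


print(RACK_HEIGHT_U * RACK_UNIT_INCHES)                   # 73.5 inches of mounting space
print(servers_per_rack(server_height_u=2, reserved_u=2))  # 20 two-U servers
```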

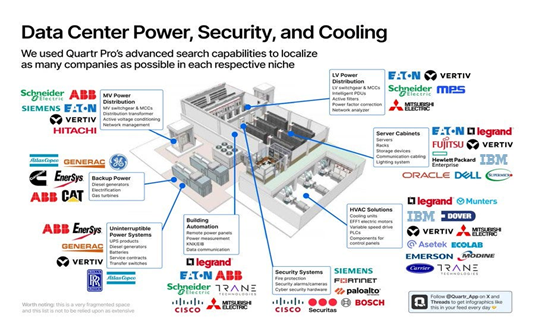

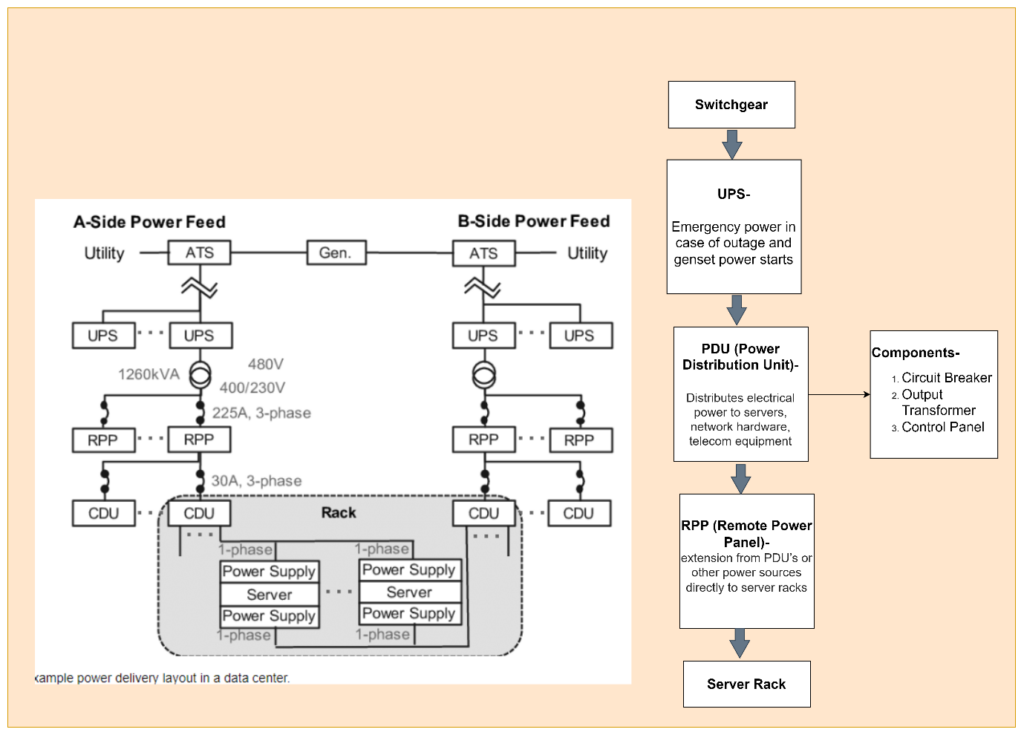

c. Power Management: Power management includes power distribution, generators, and uninterruptible power systems.

Key Components in Power Value Chain-

a. Utility Power Supply: Power comes from the main utility grid (public power infrastructure).

Power generation companies like Tata Power and Adani Power could be beneficiaries.

Because power and cooling account for about 60% of a DC’s operating cost, many DC operators build captive solar plants.

Solar EPC companies like SW Solar and Waaree Renewable could be a proxy for the data center theme.

b. Automatic Transfer Switch (ATS): Switches power between utility and backup generators in case of utility failure. Companies like ABB, Schneider Electric, Siemens, CG Power are present in the value chain.

c. Uninterruptible Power Supply (UPS): Battery backup systems provide immediate power to critical systems during short outages or while switching to generator power. Some DCs also use flywheel UPS systems, which store energy in a spinning rotor, instead of or alongside batteries. Schneider Electric and Vertiv are key players.

d. Diesel Generators: Provide long-term backup power in case of prolonged utility failure, keeping the data center operational. Cummins, Kirloskar Oil Engines are key players.

e. Power Distribution Units (PDUs): Distribute electrical power from UPS and generators to servers and networking equipment. Companies like ABB, Schneider Electric, Siemens, CG Power are present in the value chain.

f. Transformers: Step voltage levels up or down, converting high-voltage utility power to the levels used by data center equipment. Schneider Electric is a key player.

g. Power Cables: Transmit electric power from one component to another. Key players include Apar Industries, KEI Industries and other cable manufacturers.

There are also other essential components, such as surge protection and overhead bus systems. It is also worth tracking third-order suppliers, such as shunt resistor manufacturers (e.g., Shivalik Bimetal).
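During an outage, the UPS, diesel generator and ATS work together: the UPS carries the load instantly, the generator starts, and the ATS moves the load onto the generator (and back once utility power returns). A heavily simplified sketch of that decision logic; the function, timeline and timings are hypothetical, and real systems add transfer delays, synchronisation checks and many more protections:

```python
from enum import Enum


class Source(Enum):
    UTILITY = "utility"
    UPS = "ups"              # battery or flywheel bridge
    GENERATOR = "generator"
    NONE = "none"            # load dropped


def select_source(utility_ok: bool, generator_ready: bool, ups_charge_pct: float) -> Source:
    """Which source feeds the critical load right now (simplified ATS/UPS logic)."""
    if utility_ok:
        return Source.UTILITY     # grid power is preferred whenever healthy
    if generator_ready:
        return Source.GENERATOR   # ATS transfers once the generator is stable
    if ups_charge_pct > 0:
        return Source.UPS         # UPS bridges the gap until then
    return Source.NONE


# Hypothetical outage timeline: (seconds, utility_ok, generator_ready, ups_charge_pct)
timeline = [
    (0, True, False, 100),
    (1, False, False, 99),    # grid fails: UPS picks up
    (12, False, True, 95),    # generator ready: ATS transfers load
    (600, True, True, 95),    # grid restored: ATS transfers back
]
for t, utility_ok, gen_ready, ups in timeline:
    print(t, select_source(utility_ok, gen_ready, ups).value)
```

The UPS only needs enough stored energy to cover the generator start-up window, while the generator and fuel supply cover long outages.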

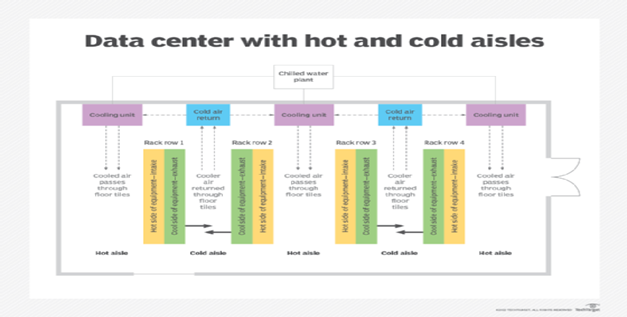

4. Cooling System: Data centers use a lot of power, which translates into heat. The more equipment that’s packed into a facility, the greater the heat generated. High temperatures and humidity levels are undesirable conditions for IT and electrical equipment. There are two types of cooling systems:

Air Cooling – This cooling method is ideal for smaller data centers or older ones that combine raised floors with hot and cold aisle designs. When the computer room AC (CRAC) unit or computer room air handler (CRAH) sends out cold air, the pressure below the raised floor increases and sends the cold air into the equipment inlets. The cold air displaces the hot air, which is then returned to the CRAC or CRAH, where it’s cooled and recirculated.
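The airflow a CRAC/CRAH system must deliver scales with the heat load. From the sensible-heat balance P = ρ · c_p · V̇ · ΔT, the required volumetric airflow is V̇ = P / (ρ · c_p · ΔT). A rough sketch using typical air properties; the 10 kW rack and 10 K temperature rise are hypothetical values:

```python
AIR_DENSITY_KG_M3 = 1.2   # approx. air density near 20 °C at sea level
AIR_CP_J_KG_K = 1005      # approx. specific heat of air
M3S_TO_CFM = 2118.88      # cubic metres per second to cubic feet per minute


def required_airflow_m3s(heat_load_w: float, delta_t_k: float) -> float:
    """Airflow (m^3/s) that removes heat_load_w with an inlet-to-outlet rise of delta_t_k."""
    return heat_load_w / (AIR_DENSITY_KG_M3 * AIR_CP_J_KG_K * delta_t_k)


# Hypothetical 10 kW rack with a 10 K rise across the servers
flow = required_airflow_m3s(10_000, 10)
print(f"{flow:.2f} m^3/s (~{flow * M3S_TO_CFM:.0f} CFM)")
```

Airflow grows linearly with rack power, so doubling rack density doubles the air that must be moved, which is one reason air cooling struggles in high-density halls.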

Liquid Cooling – A newer and more efficient cooling method that works well in high-density and edge computing data centers.

Types of liquid cooling –

- Liquid immersion cooling – This method submerges the entire electrical device in a dielectric (electrically non-conductive) fluid in a closed system. The fluid absorbs the heat emitted by the device; in two-phase systems, the fluid boils into vapor, which is then condensed and returned to the bath, cooling the device.

- Direct-to-chip liquid cooling – This method uses flexible tubes to bring coolant directly to the processing chip or motherboard component generating the most heat, such as the CPU or GPU. In the two-phase variant, a nonflammable dielectric fluid absorbs the heat by turning into vapor, which carries the heat out of the equipment through the tubing.
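Much of liquid cooling’s advantage comes from how much more heat a liquid carries per unit volume than air. A sketch comparing single-phase (sensible) heat capacity, using water as the reference liquid with approximate textbook properties; dielectric fluids have a lower heat capacity than water, and two-phase systems additionally absorb latent heat when the fluid boils, which this ignores:

```python
# Approximate properties near room temperature.
FLUIDS = {
    "air": {"density_kg_m3": 1.2, "cp_j_kg_k": 1005},
    "water": {"density_kg_m3": 998.0, "cp_j_kg_k": 4186},
}


def volumetric_heat_capacity(density_kg_m3: float, cp_j_kg_k: float) -> float:
    """Heat absorbed per cubic metre of coolant per kelvin of temperature rise, J/(m^3*K)."""
    return density_kg_m3 * cp_j_kg_k


def flow_for_load(heat_load_w: float, delta_t_k: float, fluid: str) -> float:
    """Volumetric flow (m^3/s) needed to carry heat_load_w with a delta_t_k rise."""
    return heat_load_w / (volumetric_heat_capacity(**FLUIDS[fluid]) * delta_t_k)


ratio = volumetric_heat_capacity(**FLUIDS["water"]) / volumetric_heat_capacity(**FLUIDS["air"])
print(f"water carries ~{ratio:,.0f}x more heat per unit volume than air")
for fluid in FLUIDS:
    # Hypothetical 10 kW load with a 10 K temperature rise
    print(fluid, f"{flow_for_load(10_000, 10, fluid) * 1000:.3f} L/s")
```

A small liquid flow can remove the same heat as a large volume of air, which is why liquid cooling suits high-density racks.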

Companies such as Blue Star and Vertiv are useful case studies for understanding DC cooling mechanisms in more detail.